compare FreeBSD and Linux TCP Congestion Control algorithms over emulated 1Gbps x 40ms WAN

Emulab environment

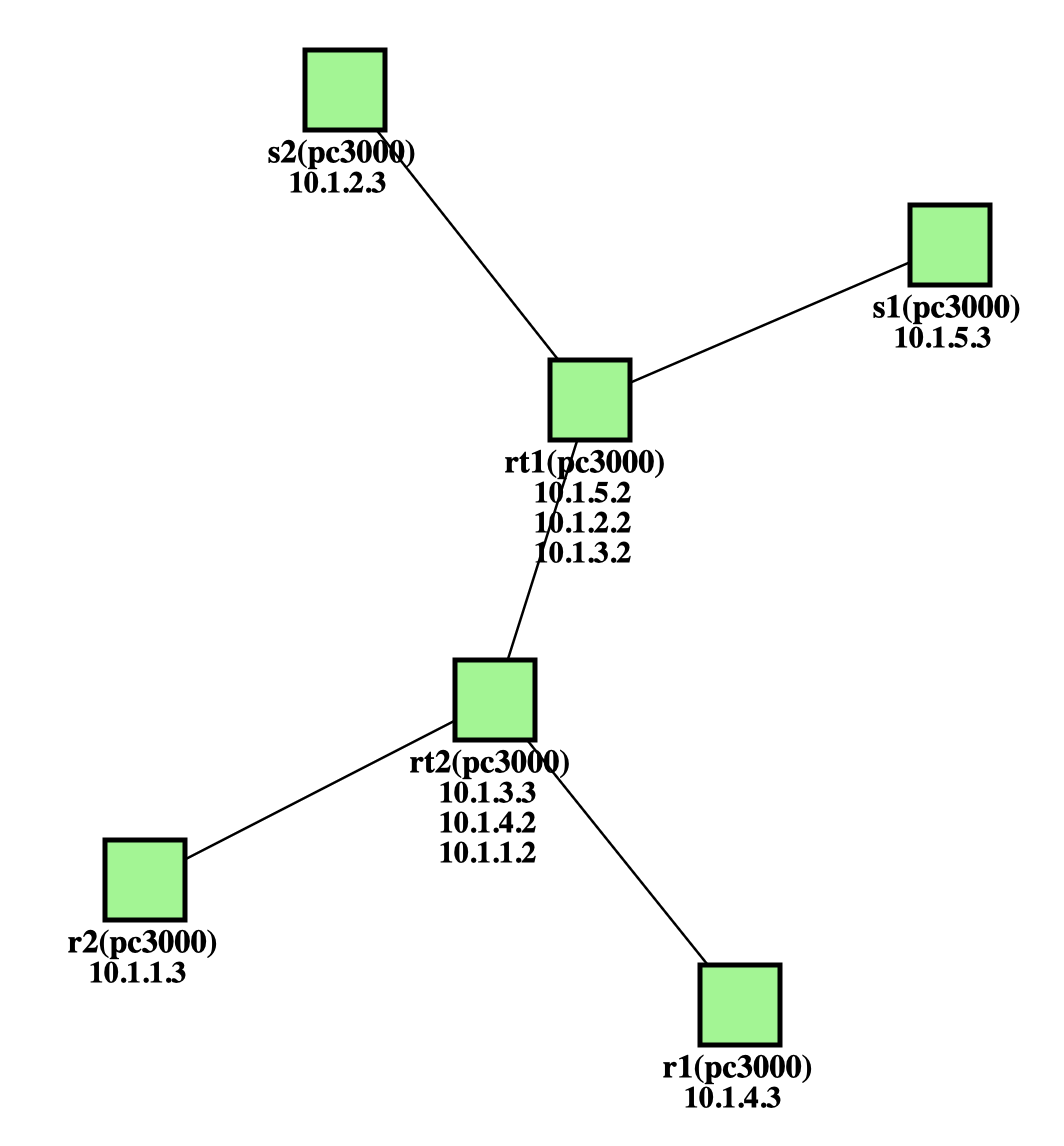

The testbed is built from Emulab.net hardware (pc3000 nodes with 1Gbps links; see the Emulab hardware page) and is configured in a dumbbell topology.

The topology consists of a dumbbell structure with two nodes (s1, s2) acting as TCP traffic senders using iperf3, two nodes (rt1, rt2) functioning as routers with a single bottleneck link, and two nodes (r1, r2) serving as TCP traffic receivers.

The sender nodes (s1, s2) are used to test TCP congestion control algorithms on different operating systems, such as FreeBSD and Ubuntu Linux. The receiver nodes (r1, r2) run Ubuntu Linux 22.04.

The router nodes (rt1, rt2) operate on Ubuntu Linux 18.04 with shallow TX/RX ring buffers configured to 128 descriptors, and the L3 buffer set to 128 packets, resulting in a total routing buffer of approximately 256 packets.

A Dummynet box introduces a 40ms round-trip delay (RTT) on the bottleneck link. All senders transmit data traffic to their corresponding receivers (e.g., s1 => r1 and s2 => r2) at the same time.

The bottleneck link has a 1Gbps bandwidth, and the TCP traffic from the two senders will experience congestion at the rt1 node's output port.
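To put the shallow router buffer in perspective, the following minimal sketch compares the roughly 256-packet buffer with the bandwidth-delay product of the path. It assumes full-size 1500-byte packets (the MTU of the router interface) and ignores header overhead; the function names are illustrative and not part of the testbed scripts.

```python
def bdp_bytes(bandwidth_bps: float, rtt_s: float) -> float:
    """Bandwidth-delay product in bytes."""
    return bandwidth_bps * rtt_s / 8


def buffer_summary(bandwidth_bps: float, rtt_s: float,
                   buffer_pkts: int, pkt_bytes: int = 1500) -> dict:
    """Relate a packet-count buffer to the BDP of the path."""
    bdp_pkts = bdp_bytes(bandwidth_bps, rtt_s) / pkt_bytes
    # Time needed to drain a completely full buffer at line rate.
    drain_s = buffer_pkts * pkt_bytes * 8 / bandwidth_bps
    return {
        "bdp_pkts": bdp_pkts,
        "buffer_fraction_of_bdp": buffer_pkts / bdp_pkts,
        "max_queue_delay_ms": drain_s * 1000,
    }


# 1Gbps bottleneck, 40ms RTT, ~256 packets of router buffer (128 ring + 128 L3)
summary = buffer_summary(1e9, 0.040, 256)
print(f"BDP: {summary['bdp_pkts']:.0f} packets")
print(f"buffer / BDP: {summary['buffer_fraction_of_bdp']:.1%}")
print(f"max queueing delay: {summary['max_queue_delay_ms']:.2f} ms")
```

Under these assumptions the buffer holds about 7.7% of the BDP and adds at most about 3 ms of queueing delay, so a sender's congestion window overshoots the buffer and sees loss well before the queue can absorb a full window.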

test config

    root@s1:~ # ping -c 5 r1
    PING r1-link5 (10.1.4.3): 56 data bytes
    64 bytes from 10.1.4.3: icmp_seq=0 ttl=62 time=40.313 ms
    64 bytes from 10.1.4.3: icmp_seq=1 ttl=62 time=40.186 ms
    64 bytes from 10.1.4.3: icmp_seq=2 ttl=62 time=40.233 ms
    64 bytes from 10.1.4.3: icmp_seq=3 ttl=62 time=40.150 ms
    64 bytes from 10.1.4.3: icmp_seq=4 ttl=62 time=40.250 ms
    --- r1-link5 ping statistics ---
    5 packets transmitted, 5 packets received, 0.0% packet loss
    round-trip min/avg/max/stddev = 40.150/40.227/40.313/0.056 ms
    root@s1:~ #

    root@s2:~ # ping -c 5 r2
    PING r2-link6 (10.1.1.3): 56 data bytes
    64 bytes from 10.1.1.3: icmp_seq=0 ttl=62 time=40.228 ms
    64 bytes from 10.1.1.3: icmp_seq=1 ttl=62 time=40.249 ms
    64 bytes from 10.1.1.3: icmp_seq=2 ttl=62 time=40.094 ms
    64 bytes from 10.1.1.3: icmp_seq=3 ttl=62 time=40.571 ms
    64 bytes from 10.1.1.3: icmp_seq=4 ttl=62 time=40.188 ms
    --- r2-link6 ping statistics ---
    5 packets transmitted, 5 packets received, 0.0% packet loss
    round-trip min/avg/max/stddev = 40.094/40.266/40.571/0.161 ms
    root@s2:~ #

- sender/receiver sysctl tuning

    root@s1:~ # cat /etc/sysctl.conf
    ...
    net.inet.tcp.hostcache.enable=0
    kern.ipc.maxsockbuf=16777216
    net.inet.tcp.sendbuf_max=16777216
    net.inet.tcp.recvbuf_max=16777216

    root@r1:~# cat /etc/sysctl.conf
    ...
    # allow testing with 256MB buffers
    net.core.rmem_max = 268435456
    net.core.wmem_max = 268435456
    # increase Linux autotuning TCP buffer limit to 256MB
    net.ipv4.tcp_rmem = 4096 131072 268435456
    net.ipv4.tcp_wmem = 4096 16384 268435456
    # don't cache ssthresh from previous connection
    net.ipv4.tcp_no_metrics_save = 1

- routers shallow buffer tuning

    root@rt1:~# /sbin/ethtool -g eth5
    Ring parameters for eth5:
    Pre-set maximums:
    RX:             4096
    RX Mini:        0
    RX Jumbo:       0
    TX:             4096
    Current hardware settings:
    RX:             128
    RX Mini:        0
    RX Jumbo:       0
    TX:             128
    root@rt1:~#

    root@rt1:~# ifconfig eth5
    eth5: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
            inet 10.1.3.2 netmask 255.255.255.0 broadcast 10.1.3.255
            inet6 fe80::204:23ff:feb7:17c9 prefixlen 64 scopeid 0x20<link>
            ether 00:04:23:b7:17:c9 txqueuelen 128 (Ethernet)
            RX packets 186 bytes 18000 (18.0 KB)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 196 bytes 19354 (19.3 KB)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
    root@rt1:~#

    root@rt1:~# /sbin/tc qdisc show dev eth5
    qdisc pfifo 8005: root refcnt 2 limit 128p
    root@rt1:~#

- switch to the FreeBSD RACK TCP stack

    root@s1:~ # kldstat
    Id Refs Address                Size Name
     1   14 0xffffffff80200000  23a57e8 kernel
     2    1 0xffffffff83200000   3cb1f8 zfs.ko
     3    1 0xffffffff83020000    31e48 tcp_rack.ko
     4    1 0xffffffff83052000     e0f0 tcphpts.ko
    root@s1:~ #
    root@s1:~ # sysctl net.inet.tcp.functions_default=rack
    net.inet.tcp.functions_default: freebsd -> rack
    root@s1:~ #

| nodes | kernel info |
| --- | --- |
| senders | FreeBSD 15.0-CURRENT c6767dc1f236: Thu Jan 30 2025 |
| routers | Ubuntu 18.04.5 LTS (GNU/Linux 4.15.0-117-generic x86_64) |
| receivers | Ubuntu 22.04.2 LTS (GNU/Linux 5.15.0-122-generic x86_64) |

- iperf3 command

iperf3 -B ${src} --cport ${tcp_port} -c ${dst} -l 1M -t 200 -i 1 -f m -VC ${name}
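For readers scripting several iterations, the command above can be assembled programmatically. The sketch below is only an illustration: the helper name `iperf3_client_cmd`, the source address `192.0.2.10` and the client port `54321` are hypothetical placeholders, not values from the testbed. The comments give the meaning of each iperf3 flag used in the original command line.

```python
import shlex


def iperf3_client_cmd(src, cport, dst, cc_name, duration_s=200, port=None):
    """Build the iperf3 client argument list used for one test run."""
    cmd = [
        "iperf3",
        "-B", src,              # bind to this local source address
        "--cport", str(cport),  # fixed client-side TCP port
        "-c", dst,              # client mode, connect to the receiver
    ]
    if port is not None:
        cmd += ["-p", str(port)]  # server port, only if given explicitly
    cmd += [
        "-l", "1M",             # 1 MByte read/write buffer length
        "-t", str(duration_s),  # test duration in seconds
        "-i", "1",              # report every second
        "-f", "m",              # report in Mbits/sec
        "-V",                   # verbose output
        "-C", cc_name,          # congestion control algorithm, e.g. cubic
    ]
    return cmd


# Hypothetical values; substitute the real ${src}, ${tcp_port}, ${dst}, ${name}.
cmd = iperf3_client_cmd("192.0.2.10", 54321, "r1", "cubic")
print(shlex.join(cmd))
```

The list form can be passed directly to `subprocess.run`, which avoids shell quoting issues when the same run is repeated for each algorithm and stack.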

test result

- highlighted performance stats

| TCP congestion control algo | average link utilization over the 200s test (1Gbps bottleneck) | peer compare |
| --- | --- | --- |
| Linux CUBIC | 846 Mbits/sec (2nd iter) | base |
| FreeBSD default stack CUBIC | 470 Mbits/sec (2nd iter) | -44.4% |
| FreeBSD RACK stack CUBIC | 505 Mbits/sec (2nd iter) | -40.3% |
| Linux newreno | 695 Mbits/sec (1st iter) | base |
| FreeBSD default stack newreno | 301 Mbits/sec (1st iter) | -56.7% |
| FreeBSD RACK stack newreno | 444 Mbits/sec (3rd iter) | -36.1% |
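The "peer compare" column is the relative difference against the Linux result for the same algorithm. A minimal sketch of that arithmetic, using the figures reported above (the function name `peer_compare` is illustrative):

```python
def peer_compare(throughput_mbps: float, base_mbps: float) -> str:
    """Relative difference versus the Linux baseline, as a signed percentage."""
    pct = (throughput_mbps - base_mbps) / base_mbps * 100
    return f"{pct:+.1f}%"


# (baseline Mbits/sec, {stack: Mbits/sec}) taken from the Emulab results
emulab = {
    "CUBIC": (846, {"FreeBSD default": 470, "FreeBSD RACK": 505}),
    "newreno": (695, {"FreeBSD default": 301, "FreeBSD RACK": 444}),
}

for algo, (base, stacks) in emulab.items():
    for stack, mbps in stacks.items():
        print(f"{stack} {algo}: {peer_compare(mbps, base)}")
```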

- throughput and congestion window of CUBIC in Linux TCP stack (figures: TCP throughput, TCP congestion window)

- throughput and congestion window of CUBIC in FreeBSD default TCP stack (figures: TCP throughput, TCP congestion window)

- throughput and congestion window of CUBIC in FreeBSD RACK TCP stack (figures: TCP throughput, TCP congestion window)

- throughput and congestion window of NewReno in Linux TCP stack (figures: TCP throughput, TCP congestion window)

- throughput and congestion window of NewReno in FreeBSD default TCP stack (figures: TCP throughput, TCP congestion window)

- throughput and congestion window of NewReno in FreeBSD RACK TCP stack (figures: TCP throughput, TCP congestion window)

VM environment with an Ethernet switch

single path test config

The virtual machines (VMs) acting as TCP traffic senders run iperf3 and are hosted by Bhyve on a physical box (Beelink SER5 AMD Mini PC) running FreeBSD 14.2-RELEASE. A second box of the same type runs Ubuntu Linux. The two boxes are connected through a 5-Port Gigabit Ethernet Switch (TP-Link TL-SG105). The switch has a shared 1Mb (0.125MB) packet buffer memory, which is just 2.5% of the 5 Mbytes BDP (1000Mbps x 40ms).

In each test, only one TCP traffic sender is active: first the FreeBSD VM n1fbsd, then the Linux VM n1ubuntu24, sends TCP data traffic over the same physical link to the receiver, the Linux box named Linux. A 40ms delay is added at the Linux receiver.

TCP packets are occasionally dropped, which lets us evaluate congestion control performance. The minimum bandwidth-delay product (BDP) is 1000Mbps x 40ms == 5 Mbytes.
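The BDP and switch-buffer figures can be reproduced with a few lines of arithmetic. The sketch below also shows why 5 Mbytes is a minimum: the RTT actually measured with ping in this setup is slightly above the nominal 40ms (41.245 ms is the average reported for n1fbsd), so the effective BDP is a little larger. Variable and function names are illustrative.

```python
def bdp_bytes(bandwidth_bps: float, rtt_s: float) -> float:
    """Bandwidth-delay product in bytes."""
    return bandwidth_bps * rtt_s / 8


LINK_BPS = 1000e6                 # 1000Mbps Ethernet link
SWITCH_BUFFER_BYTES = 1e6 / 8     # shared 1Mb packet buffer = 0.125MB

nominal_bdp = bdp_bytes(LINK_BPS, 0.040)       # emulated 40ms delay only
measured_bdp = bdp_bytes(LINK_BPS, 0.041245)   # ping average from n1fbsd

buffer_ratio = SWITCH_BUFFER_BYTES / nominal_bdp

print(f"nominal BDP:  {nominal_bdp / 1e6:.2f} MB")
print(f"measured BDP: {measured_bdp / 1e6:.2f} MB")
print(f"switch buffer / BDP: {buffer_ratio:.1%}")
```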

- additional 40ms latency is added/emulated in the receiver

    cc@Linux:~ % sudo tc qdisc add dev enp1s0 root netem delay 40ms
    cc@Linux:~ % tc qdisc show dev enp1s0
    qdisc netem 8001: root refcnt 2 limit 1000 delay 40ms
    cc@Linux:~ %

    root@n1fbsd:~ # ping -c 4 -S n1fbsd Linux
    PING Linux (192.168.50.46) from n1fbsdvm: 56 data bytes
    64 bytes from 192.168.50.46: icmp_seq=0 ttl=64 time=41.171 ms
    64 bytes from 192.168.50.46: icmp_seq=1 ttl=64 time=41.231 ms
    64 bytes from 192.168.50.46: icmp_seq=2 ttl=64 time=41.226 ms
    64 bytes from 192.168.50.46: icmp_seq=3 ttl=64 time=41.354 ms
    --- Linux ping statistics ---
    4 packets transmitted, 4 packets received, 0.0% packet loss
    round-trip min/avg/max/stddev = 41.171/41.245/41.354/0.067 ms
    root@n1fbsd:~ #

    root@n1ubuntu24:~ # ping -c 4 -I 192.168.50.161 Linux
    PING Linux (192.168.50.46) from 192.168.50.161 : 56(84) bytes of data.
    64 bytes from Linux (192.168.50.46): icmp_seq=1 ttl=64 time=40.9 ms
    64 bytes from Linux (192.168.50.46): icmp_seq=2 ttl=64 time=40.9 ms
    64 bytes from Linux (192.168.50.46): icmp_seq=3 ttl=64 time=41.0 ms
    64 bytes from Linux (192.168.50.46): icmp_seq=4 ttl=64 time=41.1 ms
    --- Linux ping statistics ---
    4 packets transmitted, 4 received, 0% packet loss, time 3061ms
    rtt min/avg/max/mdev = 40.905/40.981/41.108/0.078 ms
    root@n1ubuntu24:~ #

- sender/receiver sysctl tuning (max buffer 10MB)

    root@n1fbsd:~ # sysctl -f /etc/sysctl.conf
    net.inet.tcp.hostcache.enable: 0 -> 0
    kern.ipc.maxsockbuf: 10485760 -> 10485760
    net.inet.tcp.sendbuf_max: 10485760 -> 10485760
    net.inet.tcp.recvbuf_max: 10485760 -> 10485760
    root@n1fbsd:~ #

    root@n1ubuntu24:~ # sysctl -p
    net.core.rmem_max = 10485760
    net.core.wmem_max = 10485760
    net.ipv4.tcp_rmem = 4096 131072 10485760
    net.ipv4.tcp_wmem = 4096 16384 10485760
    net.ipv4.tcp_no_metrics_save = 1
    root@n1ubuntu24:~ #

    cc@Linux:~ % sudo sysctl -p
    net.core.rmem_max = 10485760
    net.core.wmem_max = 10485760
    net.ipv4.tcp_rmem = 4096 131072 10485760
    net.ipv4.tcp_wmem = 4096 16384 10485760
    net.ipv4.tcp_no_metrics_save = 1
    cc@Linux:~ %
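As a sanity check on the 10MB limit, the socket buffer ceiling should be at least one BDP, otherwise the buffer rather than congestion control would cap the throughput. The sketch below parses the Linux `sysctl -p` output shown above and compares the autotuning maximum with the 5 Mbytes BDP; the parser is a simplified illustration, not a complete sysctl.conf reader.

```python
def parse_sysctl(text: str) -> dict:
    """Parse 'key = value' lines, skipping comments and blank lines."""
    settings = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()
        if "=" not in line:
            continue
        key, value = line.split("=", 1)
        settings[key.strip()] = value.strip()
    return settings


LINUX_SYSCTL = """\
net.core.rmem_max = 10485760
net.core.wmem_max = 10485760
net.ipv4.tcp_rmem = 4096 131072 10485760
net.ipv4.tcp_wmem = 4096 16384 10485760
net.ipv4.tcp_no_metrics_save = 1
"""

BDP_BYTES = 5_000_000  # 1000Mbps x 40ms

conf = parse_sysctl(LINUX_SYSCTL)
# tcp_wmem holds three values: min, default, max (bytes)
tcp_wmem_max = int(conf["net.ipv4.tcp_wmem"].split()[2])
headroom = tcp_wmem_max / BDP_BYTES

print(f"tcp_wmem max = {tcp_wmem_max} bytes, {headroom:.2f}x BDP")
```

With these settings the send buffer ceiling is roughly twice the BDP, so the socket buffers leave room for the congestion window to reach and exceed one BDP.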

- switch to the FreeBSD RACK TCP stack

    root@n1fbsd:~ # kldstat
    Id Refs Address                Size Name
     1    5 0xffffffff80200000  1f75ca0 kernel
     2    1 0xffffffff82810000    368d8 tcp_rack.ko
     3    1 0xffffffff82847000     f0f0 tcphpts.ko
    root@n1fbsd:~ # sysctl net.inet.tcp.functions_default
    net.inet.tcp.functions_default: rack
    root@n1fbsd:~ #

| host | OS and kernel info |
| --- | --- |
| FreeBSD sender | FreeBSD 15.0-CURRENT main-87eaa30e9b39: Wed Mar 12 2025 |
| Linux sender | Ubuntu server 24.04.2 LTS (GNU/Linux 6.8.0-55-generic x86_64) |
| Linux receiver | Ubuntu desktop 24.04.2 LTS (GNU/Linux 6.11.0-17-generic x86_64) |

- iperf3 command

iperf3 -B ${src} --cport ${tcp_port} -c ${dst} -p 5201 -l 1M -t 300 -i 1 -f m -VC ${name}

test result

- highlighted performance stats (FreeBSD congestion controls perform on par with or better than their Linux peers, except NewReno on the RACK stack, which is 23.6% lower)

| TCP congestion control algo | iperf3 300 seconds average throughput |
| --- | --- |
| Linux CUBIC | 725 Mbits/sec (base) |
| FreeBSD default stack CUBIC | 762 Mbits/sec (+5.1%) |
| FreeBSD RACK stack CUBIC | 730 Mbits/sec (+0.7%) |
| Linux newreno | 656 Mbits/sec (base) |
| FreeBSD default stack newreno | 765 Mbits/sec (+16.6%) |
| FreeBSD RACK stack newreno | 501 Mbits/sec (-23.6%) |

- congestion window and throughput of CUBIC & NewReno in FreeBSD default TCP stack (figures: TCP congestion window, TCP throughput)

- congestion window and throughput of CUBIC & NewReno in FreeBSD RACK TCP stack (figures: TCP congestion window, TCP throughput)

- congestion window and throughput of CUBIC & NewReno in Linux (figures: TCP congestion window, TCP throughput)

tri-point topology test config

Two virtual machines (VMs) acting as traffic senders are hosted by Bhyve on two separate physical boxes (Beelink SER5 AMD Mini PC). The Bhyve hosts run FreeBSD 14.2-RELEASE. The second sender starts 3 seconds after the first, to show how the two flows converge towards a fair share.

A single traffic receiver runs Ubuntu Linux 24.04 LTS. To keep the tri-point topology simple, the receiver is a physical box (the same Beelink model).

The three physical boxes are connected through a 5-Port Gigabit Ethernet Switch (TP-Link TL-SG105). The switch has a shared 1Mb (0.125MB) packet buffer memory, which is just 2.5% of the 5 Mbytes BDP (1000Mbps x 40ms).

- additional 40ms latency is added/emulated in the receiver

    cc@Linux:~$ sudo tc qdisc add dev enp1s0 root netem delay 40ms
    cc@Linux:~$
    cc@Linux:~$ tc qdisc show dev enp1s0
    qdisc netem 8001: root refcnt 2 limit 1000 delay 40ms
    cc@Linux:~$

    root@n1fbsd:~ # ping -c 5 -S n1fbsd Linux
    PING Linux (192.168.50.46) from n1fbsdvm: 56 data bytes
    64 bytes from 192.168.50.46: icmp_seq=0 ttl=64 time=41.169 ms
    64 bytes from 192.168.50.46: icmp_seq=1 ttl=64 time=41.329 ms
    64 bytes from 192.168.50.46: icmp_seq=2 ttl=64 time=41.399 ms
    64 bytes from 192.168.50.46: icmp_seq=3 ttl=64 time=41.275 ms
    64 bytes from 192.168.50.46: icmp_seq=4 ttl=64 time=41.239 ms
    --- Linux ping statistics ---
    5 packets transmitted, 5 packets received, 0.0% packet loss
    round-trip min/avg/max/stddev = 41.169/41.282/41.399/0.078 ms
    root@n1fbsd:~ #

    root@n2fbsd:~ # ping -c 5 -S n2fbsd Linux
    PING Linux (192.168.50.46) from n2fbsdvm: 56 data bytes
    64 bytes from 192.168.50.46: icmp_seq=0 ttl=64 time=40.956 ms
    64 bytes from 192.168.50.46: icmp_seq=1 ttl=64 time=41.377 ms
    64 bytes from 192.168.50.46: icmp_seq=2 ttl=64 time=41.496 ms
    64 bytes from 192.168.50.46: icmp_seq=3 ttl=64 time=41.262 ms
    64 bytes from 192.168.50.46: icmp_seq=4 ttl=64 time=41.615 ms
    --- Linux ping statistics ---
    5 packets transmitted, 5 packets received, 0.0% packet loss
    round-trip min/avg/max/stddev = 40.956/41.341/41.615/0.226 ms
    root@n2fbsd:~ #

- sender/receiver sysctl tuning

    n1fbsd and n2fbsd: cat /etc/sysctl.conf
    ...
    # Don't cache ssthresh from previous connection
    net.inet.tcp.hostcache.enable=0
    # Increase FreeBSD maximum socket buffer size up to 128MB
    kern.ipc.maxsockbuf=134217728
    # Increase FreeBSD Max size of automatic send/receive buffer up to 128MB
    net.inet.tcp.sendbuf_max=134217728
    net.inet.tcp.recvbuf_max=134217728
    root@n1fbsd:~ #

    n1linux and n2linux: cat /etc/sysctl.conf
    ...
    # To support 10Gbps on paths of 100ms, 120MB of buffer must be available.
    net.core.rmem_max = 134217728
    net.core.wmem_max = 134217728
    # Increase Linux autotuning TCP buffer max up to 128MB buffers
    net.ipv4.tcp_rmem = 4096 131072 134217728
    net.ipv4.tcp_wmem = 4096 16384 134217728
    # Don't cache ssthresh from previous connection
    net.ipv4.tcp_no_metrics_save = 1

    cc@Linux:~$ cat /etc/sysctl.conf
    ...
    # allow testing with 256MB buffers
    net.core.rmem_max = 268435456
    net.core.wmem_max = 268435456
    # increase Linux autotuning TCP buffer limit to 256MB
    net.ipv4.tcp_rmem = 4096 131072 268435456
    net.ipv4.tcp_wmem = 4096 16384 268435456
    # don't cache ssthresh from previous connection
    net.ipv4.tcp_no_metrics_save = 1
    cc@Linux:~$

| nodes | kernel info |
| --- | --- |
| FreeBSD senders | FreeBSD 15.0-CURRENT main-06016adaccca: Wed Feb 19 EST 2025 |
| Linux senders | Ubuntu 22.04.3 LTS (GNU/Linux 5.15.0-133-generic x86_64) |
| receiver | Ubuntu 24.04.2 LTS (GNU/Linux 6.11.0-17-generic x86_64) |

- iperf3 commands (sender2 starts after a 3-second delay; the `-s` lines are run on the receiver)

iperf3 -s -p 5201

iperf3 -B ${src} --cport ${tcp_port} -c ${dst} -p 5201 -l 1M -t 200 -i 1 -f m -VC ${name}

iperf3 -s -p 5202

sleep 3; iperf3 -B ${src} --cport ${tcp_port} -c ${dst} -p 5202 -l 1M -t 200 -i 1 -f m -VC ${name}

test result

- highlighted performance stats

| TCP congestion control algo | average link utilization over the 200s test (1Gbps bottleneck) | peer compare |
| --- | --- | --- |
| Linux CUBIC | 60.7 Mbits/sec (5th iter) | base |
| FreeBSD default stack CUBIC | 65.7 Mbits/sec (2nd iter) | +8.2% |
| FreeBSD RACK stack CUBIC | 110.9 Mbits/sec (3rd iter) | +82.7% |

- throughput and congestion window of CUBIC in FreeBSD default TCP stack (figures: TCP throughput, TCP congestion window)

- throughput and congestion window of CUBIC in FreeBSD RACK TCP stack (figures: TCP throughput, TCP congestion window)

- throughput and congestion window of CUBIC in Linux TCP stack (figures: TCP throughput, TCP congestion window)